Running container registries inside k8s

Author: Luca Montechiesi (lumontec)

Running your binaries on k8s requires pulling images from a container registry. Do you use a public one? Then you need authentication, you have to load secrets, and you get bored... Do you use a private one? Then you have to make sure it is reachable from inside your cluster. Heck, I just want to push my code. How easy would life be if I could run my registry inside k8s itself and pull the images from within? With this hack you can, thanks to the oddities of NodePorts.

The painful declarative way

One of the most loved / hated concepts in k8s is the way it allows / forces us to do pretty much everything in a declarative fashion. By decoupling the what from the how, it lets us behave like selfish, arrogant developers who only describe what we want and ignore who will take care of the steps required to satisfy the request. A YAML manifest only tells k8s which binaries we want to run, but for that to happen the container image has to exist in a registry and must be pullable. Let's face it, this is painful. Not for a single-use cluster, but imagine working on 10 different clusters with access to different networks, registries, secrets... This is insanely boring maintenance. During early development, when you generally need to push your image to dozens of environments, it becomes a nightmare every time you add a line to your container image or your binary. Developing operators is one specific example where this problem comes up a lot, and sometimes you really need to deploy, and do it fast.

At that point I am sure some of you came to the same conclusion I did:

Wait a minute, k8s is the de facto system designed to run services, and a container registry is a service... Problem solved!

Single step deploy solution

This is embarrassingly easy: we just deploy our registry service to the cluster:

    apiVersion: v1
    kind: Namespace
    metadata:
      name: kube-registry
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: registry
      name: registry
      namespace: kube-registry
    spec:
      ports:
      - nodePort: 30100
        port: 5000
        protocol: TCP
        targetPort: 5000
      selector:
        app: registry
      type: NodePort
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: registry-pvc
      namespace: kube-registry
    spec:
      storageClassName: ""
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 5Gi
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: registry
      name: registry
      namespace: kube-registry
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: registry
      template:
        metadata:
          labels:
            app: registry
        spec:
          containers:
          - image: registry:2
            imagePullPolicy: IfNotPresent
            name: registry
            volumeMounts:
            - mountPath: /var/lib/registry
              name: registry-vol
          volumes:
          - name: registry-vol
            persistentVolumeClaim:
              claimName: registry-pvc

Apply it and here's what you get:

    $ kg all -n kube-registry
    + kubectl get all -n kube-registry
    NAME                               READY   STATUS    RESTARTS   AGE
    pod/kube-registry-677b64f8-xxhq9   1/1     Running   0          20d

    NAME                    TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
    service/kube-registry   NodePort   172.20.128.62   <none>        5000:30100/TCP   94d

    NAME                            READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/kube-registry   1/1     1            1           94d

    NAME                                     DESIRED   CURRENT   READY   AGE
    replicaset.apps/kube-registry-677b64f8   1         1         1       94d
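
The apply step itself can be scripted so that it only returns once the registry is actually up. A minimal sketch, assuming the manifest above was saved as registry.yaml (a hypothetical file name) and that the Deployment is called registry as in that manifest; the helper name deploy_registry is made up for illustration:

```shell
# deploy_registry [manifest] [namespace]
# Applies the manifest, then blocks until the Deployment named "registry"
# reports a finished rollout or the 120s timeout expires.
# Assumptions: registry.yaml is a hypothetical file name; kubectl is on PATH.
deploy_registry() {
  kubectl apply --filename="${1:-registry.yaml}" &&
    kubectl rollout status deployment/registry \
      --namespace="${2:-kube-registry}" --timeout=120s
}
```

Calling `deploy_registry` with no arguments uses the defaults; a non-zero exit status means either the apply or the rollout did not complete.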

Was too good to be true

As soon as we get our registry up and running, that wonderful sensation of accomplishment immediately dies when we ask ourselves the following questions:

- Now that I have my registry up and running, how will I push my image to it?

- Second, and most important, what value do I set as my Deployment image to pull from this registry?

Let's go in order.

Pushing to the registry

From the pushing perspective, remember that our registry is merely a service exchanging data over HTTP. We could easily put an ingress in front of it or, even easier, just port-forward it to localhost and push there.

    $ kubectl port-forward service/registry 30100:5000 -n kube-registry
    Forwarding from 127.0.0.1:30100 -> 5000
    Forwarding from [::1]:30100 -> 5000

Now we can push from another terminal. For convenience we will use an example alpine image and simply push it to the service forwarded on our localhost:

    $ docker pull alpine:latest
    $ docker tag alpine:latest localhost:30100/alpine:latest
    $ docker push localhost:30100/alpine:latest
    The push refers to repository [localhost:30100/alpine]
    4fc242d58285: Pushed
    latest: digest: sha256:a777c9c66ba177ccfea23f2a216ff6721e78a662cd17019488c417135299cd89 size: 528
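
To double-check that the image really landed in the registry, the registry's HTTP API can be queried through the same port-forward. A small sketch, assuming curl is installed and the port-forward is still running; the helper names registry_api_url and list_pushed are hypothetical, while /v2/_catalog and /v2/<name>/tags/list are endpoints of the Registry HTTP API v2:

```shell
# registry_api_url <host:port> [repository]
# Prints the API URL for the repository catalog, or for one repository's tags.
registry_api_url() {
  if [ -n "${2:-}" ]; then
    printf 'http://%s/v2/%s/tags/list\n' "$1" "$2"
  else
    printf 'http://%s/v2/_catalog\n' "$1"
  fi
}

# list_pushed <host:port> [repository]
# Fetches that URL; fails with a non-zero status on HTTP errors.
list_pushed() {
  curl --fail --silent --show-error "$(registry_api_url "$@")"
}
```

`list_pushed localhost:30100` should return a JSON document listing the repositories, and `list_pushed localhost:30100 alpine` the tags pushed for alpine.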

Pulling from the registry

This is the tricky part, and the one that will send your mind into an infinite loop. Let's start from a standard YAML manifest pulling our image from an external registry:

    apiVersion: v1
    kind: Pod
    metadata:
      name: alpine
      namespace: default
    spec:
      containers:
      - image: alpine:latest
        command:
        - /bin/sh
        - "-c"
        - "sleep 60m"
        imagePullPolicy: IfNotPresent
        name: alpine
      restartPolicy: Always

Our image, alpine:latest, is by default pulled from docker.io/library/alpine:latest inside the cluster.
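
How a short image name turns into a registry address matters for everything that follows, so here is a rough sketch of the Docker-style expansion rules. It assumes the usual convention: the part before the first slash is treated as a registry host only if it contains a dot or a colon or is exactly localhost; otherwise docker.io is used, with library/ prefixed to bare names. Other runtimes may be configured to resolve short names differently, and normalize_image is a hypothetical helper, not part of any tool:

```shell
# normalize_image <reference>
# Prints the fully qualified form of a Docker-style image reference.
# The body runs in a subshell ( ... ) so its variables stay local.
normalize_image() (
  ref=$1
  first=${ref%%/*}                      # text before the first slash
  case $ref in
    */*)
      case $first in
        *.*|*:*|localhost) ;;           # first component is a registry host
        *) ref="docker.io/$ref" ;;      # user/app -> docker.io/user/app
      esac ;;
    *) ref="docker.io/library/$ref" ;;  # bare name -> official image
  esac
  last=${ref##*/}                       # final component, where a tag lives
  case $last in
    *:*|*@*) ;;                         # tag or digest already present
    *) ref="$ref:latest" ;;             # default tag
  esac
  printf '%s\n' "$ref"
)
```

With these rules `normalize_image alpine:latest` prints docker.io/library/alpine:latest, while a reference such as localhost:30100/alpine is left pointing at localhost:30100 and only gains the :latest tag.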

Fine, you will think: if I want to pull from the kube-registry service, I just need to use the usual convoluted form for reaching services in other namespaces: service-y.namespace-b.svc.cluster.local

    ...
      containers:
      - image: kube-registry.kube-registry.svc.cluster.local/alpine:latest
        command:
    ...

This feels very weird as you type it, and at the bottom of our hearts we already know it won't work, but we are stupid and we try anyway, because we are simple humans and we love to waste our time:

    $ kubectl apply -f alpine.yaml
    $ kubectl get all
    NAME         READY   STATUS         RESTARTS   AGE
    pod/alpine   0/1     ErrImagePull   0          8s

Let's have a look at the events:

    Events:
      Type     Reason     Age                From               Message
      ----     ------     ----               ----               -------
      Normal   Scheduled  64s                default-scheduler  Successfully assigned default/alpine to ip-10-9-70-48.us-west-2.compute.internal
      Normal   Pulling    28s (x3 over 63s)  kubelet            Pulling image "kube-registry.kube-registry.svc.cluster.local/alpine:latest"
      Warning  Failed     28s (x3 over 63s)  kubelet            Failed to pull image "kube-registry.kube-registry.svc.cluster.local/alpine:latest": rpc error: code = Unknown desc = Error response from daemon: Get "https://kube-registry.kube-registry.svc.cluster.local/v2/": dial tcp: lookup kube-registry.kube-registry.svc.cluster.local: no such host
      Warning  Failed     28s (x3 over 63s)  kubelet            Error: ErrImagePull
      Normal   BackOff    2s (x4 over 63s)   kubelet            Back-off pulling image "kube-registry.kube-registry.svc.cluster.local/alpine:latest"
      Warning  Failed     2s (x4 over 63s)   kubelet            Error: ImagePullBackOff

Now we are starting to understand that we need to take a journey down the rabbit hole once again, so let's get into the guts of k8s.

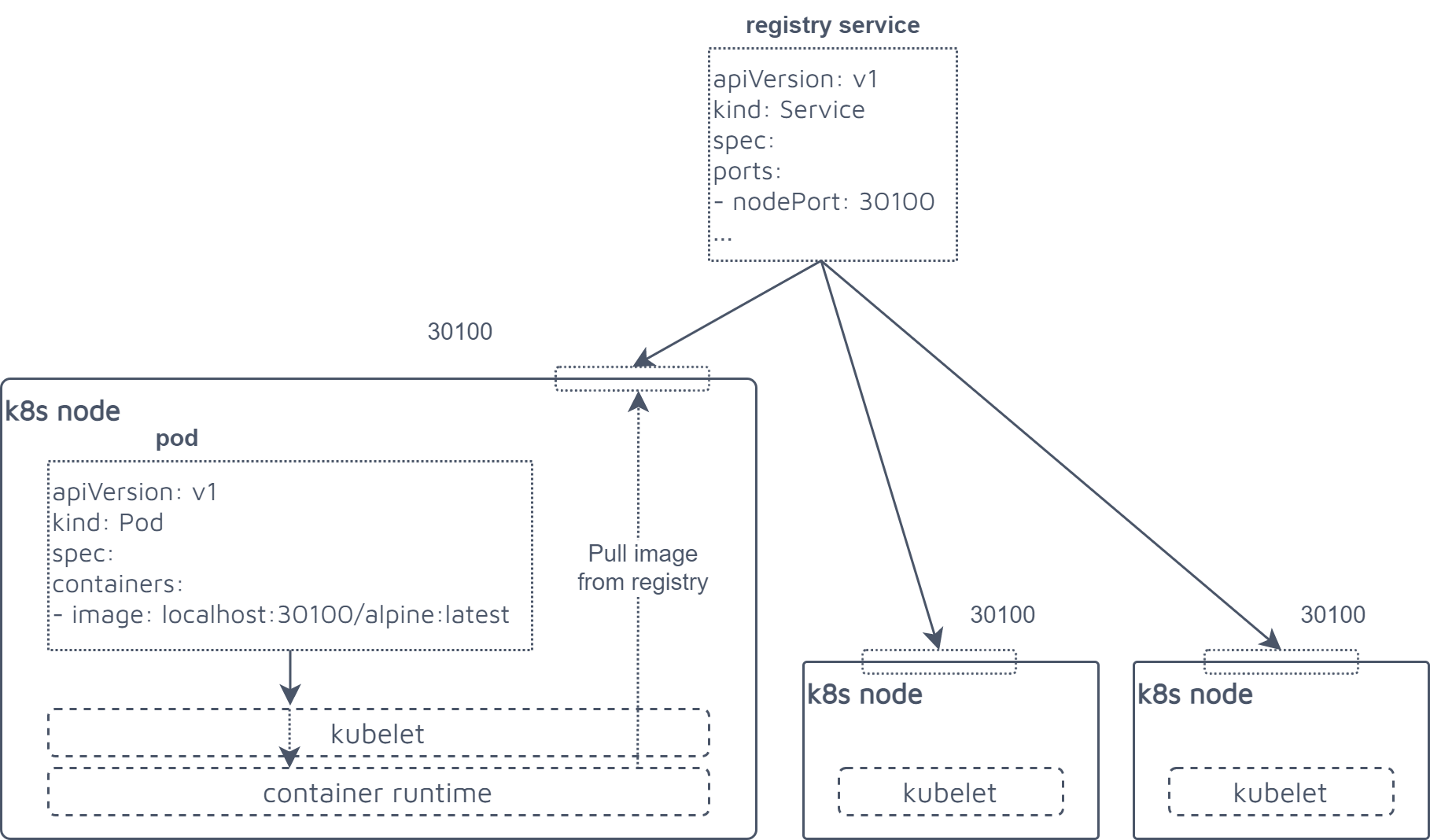

The first obvious question that takes us into the guts of k8s: who pulls this image? The answer is fairly simple: the kubelet asks the container runtime on the host to pull it from the specified registry.

The problem is that the container runtime (dockerd, cri-o, or whatever) lives outside of k8s. It normally resolves names through the node's own DNS configuration rather than the cluster DNS, so it has no idea who kube-registry.kube-registry.svc.cluster.local is, or how to resolve that name to the IP address of the service. Having figured out the problem, we are now completely empty-handed. What a joyful moment.
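
The mismatch can be checked by hand. A rough sketch, assuming the default cluster domain cluster.local, a node where getent is available, and a busybox image for a throwaway pod; the pod name dns-check and the three helper names are made up for illustration:

```shell
# svc_fqdn <service> <namespace>
# Prints the cluster-internal DNS name of a Service (cluster.local assumed).
svc_fqdn() {
  printf '%s.%s.svc.cluster.local\n' "$1" "$2"
}

# resolve_on_node <service> <namespace>
# Run on a node: uses the host resolver, like the container runtime does.
resolve_on_node() {
  getent hosts "$(svc_fqdn "$1" "$2")"
}

# resolve_in_pod <service> <namespace>
# Run from a workstation: starts a temporary pod that uses the cluster DNS.
resolve_in_pod() {
  kubectl run dns-check --rm --attach --restart=Never --image=busybox:1.36 \
    -- nslookup "$(svc_fqdn "$1" "$2")"
}
```

On a typical cluster one would expect `resolve_in_pod registry kube-registry` to find the service while `resolve_on_node registry kube-registry` returns nothing, which is the same situation the kubelet ran into above.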

NodePort happy that you exist

So at this point, why not use one of the most mistreated features of k8s? NodePort is accused of being, and honestly feels like, a freak of nature: it sidesteps security and authorization schemes and provides a quick shortcut for exposing stuff outside the cluster. If you take a closer look at our manifest for the kube-registry, we exposed the service with nodePort: 30100, which means that our registry is available on every node of the cluster at this port. From the point of view of the container runtime running on a node, the registry is then simply localhost:30100.

Hell, this is immensely stupid, let's try it in one sec:

    apiVersion: v1
    kind: Pod
    metadata:
      name: alpine
      namespace: default
    spec:
      containers:
      - image: localhost:30100/alpine:latest
        command:
        - /bin/sh
        - "-c"
        - "sleep 60m"
        imagePullPolicy: IfNotPresent
        name: alpine
      restartPolicy: Always

Apply it and magically:

    $ kubectl apply -f alpine.yaml
    pod/alpine created
    $ kubectl get all
    NAME         READY   STATUS    RESTARTS   AGE
    pod/alpine   1/1     Running   0          45m
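
Rather than hard-coding 30100 in every manifest, the image prefix can be derived from the Service itself. A minimal sketch, assuming kubectl access to the cluster; node_port and local_image are hypothetical helper names:

```shell
# node_port <service> <namespace>
# Prints the first nodePort declared on a Service.
node_port() {
  kubectl get service "$1" --namespace="$2" \
    --output=jsonpath='{.spec.ports[0].nodePort}'
}

# local_image <service> <namespace> <name:tag>
# Prints the image reference a pod spec would use to pull through the NodePort.
local_image() {
  printf 'localhost:%s/%s\n' "$(node_port "$1" "$2")" "$3"
}
```

For the manifest used in this post, `local_image registry kube-registry alpine:latest` should yield the same reference as the pod spec above. Note that this relies on the node accepting NodePort connections on its loopback address, which depends on how the cluster's service proxy is set up and may not hold in every proxy mode.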

If you had an ingress set up, you could have achieved the same thing by exposing the registry through a public-facing ingress, but that would have made things way more complex: the ingress would have to handle TLS termination, since the container runtime by default only pulls from TLS-secured registries, with the exception of the ones configured as insecure, for example in Docker's daemon.json:

    {
      "insecure-registries": ["myregistrydomain.com:5000"]
    }

Because we use a local endpoint for our registry, we can pull without TLS, since the runtime always trusts its own host (Docker, for instance, treats registries on 127.0.0.0/8 as insecure by default). Since everybody likes looking at drawings instead of reading boring stuff, here you go.

Other funny games with NodePort

Once you get used to playing with k8s PVs and PVCs, one of the first things I felt the urge to implement was a ubiquitous shared PVC that I could mount on any machine to access the same filesystem for multiple reads and writes. To get this working quick and dirty, I jumped on the solution of running an internal NFS service and pointing my volumes at it.

This quickly brings us to the very same problem: the NFS client is baked into the Linux kernel, so once again it cannot resolve the k8s service name into an IP address, yet it can always resolve localhost. The bottom line is that the previous technique can also be applied to create persistent shared storage available to anybody inside the k8s cluster.

This is food for another post, in case I find the time or the need to come back to this topic.

End of the journey

I find this hacky registry deployment extremely useful. Being able to always point my images at the same registry name while switching between environments feels like a dream, and it spares me from handling registry credentials. This is definitely not the secure, production way to run things, but sometimes we really just want our stuff pushed in a minute.

Let me know your thoughts on Medium, or check out my repos if you are curious about my projects.